In the latest extension of Facebook’s parental hand to shield us from ‘harmful’ content, the platform has announced that it will start filtering out photoshopped images on Instagram.

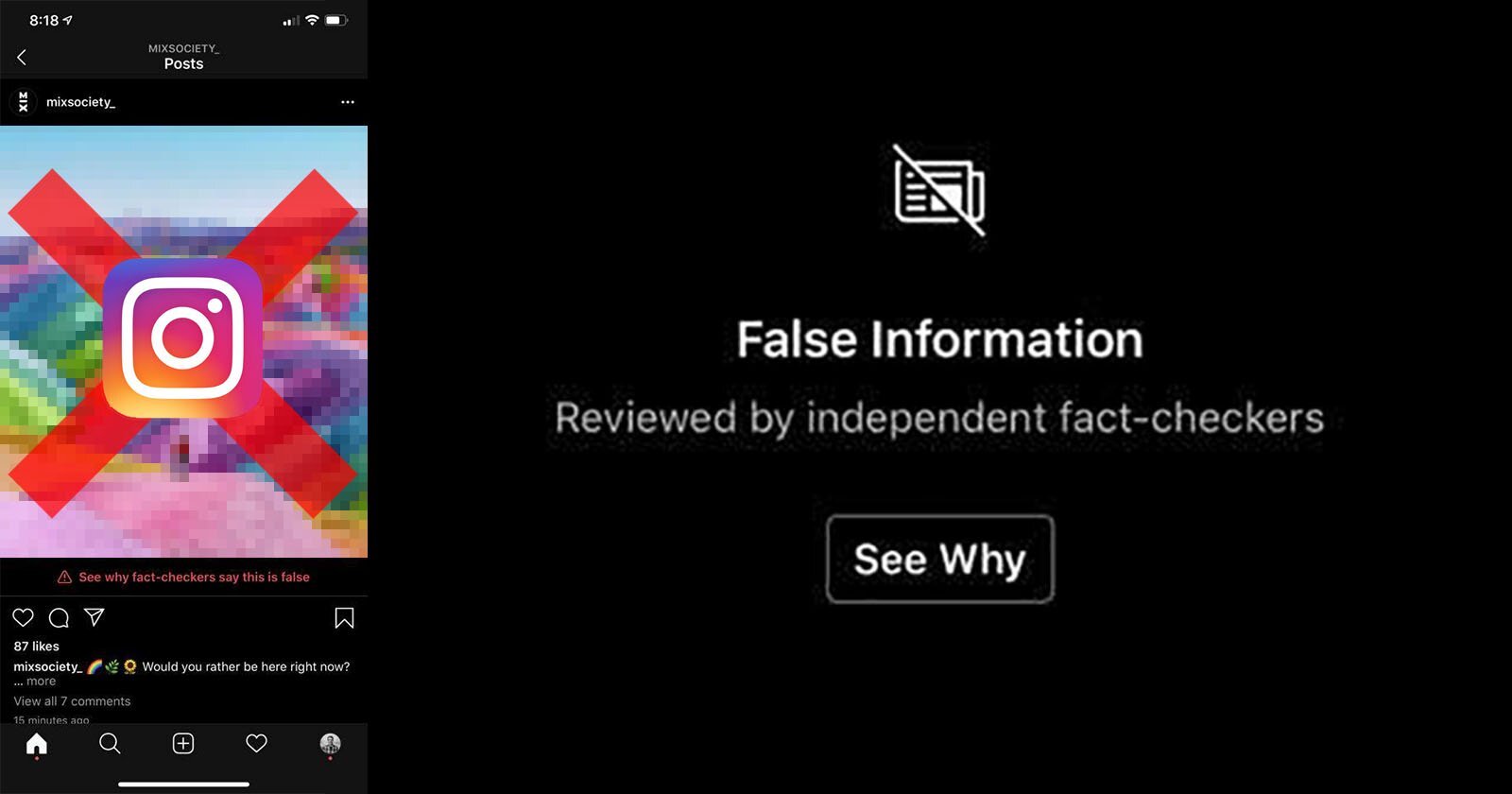

The feature, first discovered by San Francisco-based photographer Toby Harriman, will see suspect posts tagged with an ominous black ‘False Information’ banner. As with the platform’s current concealment of posts filtered out as ‘sensitive content’, users will have the option to view the blocked images, as well as to learn more about the reasons underlying their flagging.

On the surface, it may sound like a progressive step in the social media giant’s drive to stem the flow of misinformation that pollutes its channels. For its numerous artists and creatives though, the move is a concerning one, with potentially profound consequences for how digitally altered art imagery is circulated. In Toby’s case, for example, the censored content in question was an image of a man standing on rainbow-coloured mountains, prompting concerns that “Instagram x Facebook will start tagging false photos/digital art,” he wrote in a Facebook post. “I have a huge respect for digital art and don’t want to have to click through barriers to see it.”

So how exactly does the platform discern between ‘authentic’ and altered images? According to Instagram, it uses a vague-sounding “combination of feedback from our community and technology,” with earmarked photos then passed on to third-party fact-checkers to determine whether or not they’ve been doctored.

As earnest an initiative as this attempt to clean up our URL experience may seem, it smacks of disingenuousness in light of Facebook’s announcement late last year that it wouldn’t be fact-checking political advertisements in the name of free speech. While potentially nation-changing lies about the current political state of affairs are safe to consume, pictures of people standing on pretty mountains are not, it appears.